28 Apr 2021 | Magazine, Magazine Editions, Volume 50.01 Spring 2021

Author

Ma Jian is an award-winning Chinese writer. His latest novel is China Dream. His work is banned in China.

Singer

Gelareh Sheibani was born in Iran and took keyboard lessons as a youngster. She found a passion for singing in her teenage years, but solo singing in the country was not permitted. After the release of the video for her song Nagoo Tanhaei and its follow-up, she was arrested and prosecuted. She later left the country and now lives in Turkey.

Journalist

Sarah Sands is Chair of the Gender Equality Advisory Council for G7 and a board member of Index on Censorship. Sands is a former editor of the BBC’s Today programme.

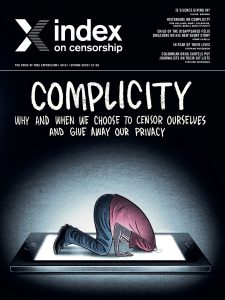

30 Mar 2020 | Magazine, Magazine Contents, Volume 49.01 Spring 2020

[vc_row][vc_column][vc_custom_heading text="With contributions from Ak Welsapar, Julian Baggini, Alison Flood, Jean-Paul Marthoz and Victoria Pavlova"][/vc_column][/vc_row][vc_row][vc_column][vc_column_text]

The Spring 2020 issue of Index on Censorship magazine looks at our own role in free speech violations. In this issue we talk to Swedish people who are willingly having microchips inserted under their skin. Noelle Mateer writes about living in China as her neighbours, and her landlord, embraced video surveillance cameras. The historian Tom Holland highlights the best examples from the past of people willing to self-censor. Jemimah Steinfeld discusses holding back from difficult conversations at the dinner table, alongside interviewing Helen Lewis on one of the most heated conversations of today. And Steven Borowiec asks why a North Korean is protesting against the current South Korean government. Plus Mark Frary tests the popular apps to see how much data you are knowingly - or unknowingly - giving away.

In our In Focus section, we sit down with different generations of people from Turkey and China and discuss with them what they can and cannot talk about today compared to the past. We also look at how, as world demand for cocaine grows, journalists in Colombia are increasingly under threat. Finally, is internet browsing biased against LGBTQ stories? A special Index investigation.

Our culture section contains an exclusive short story from Libyan writer Najwa Bin Shatwan about an author who changes her story to please people, as well as stories from Argentina and Bangladesh.

[/vc_column_text][/vc_column][/vc_row][vc_row][vc_column][vc_custom_heading text="Special Report"][/vc_column][/vc_row][vc_row][vc_column][vc_column_text]Willingly watched by Noelle Mateer: Chinese people are installing their own video cameras as they believe losing privacy is a price they are willing to pay for enhanced safety

The big deal by Jean-Paul Marthoz: French journalists past and present have felt pressure to conform to the view of the tribe in their reporting

Don't let them call the tune by Jeffrey Wasserstrom: A professor debates the moral questions about speaking at events sponsored by an organisation with links to the Chinese government

Chipping away at our privacy by Nathalie Rothschild: Swedes are having microchips inserted under their skin. What does that mean for their privacy?

There's nothing wrong with being scared by Kirsten Han: As a journalist from Singapore grows up, her views on those who have self-censored change

How to ruin a good dinner party by Jemimah Steinfeld: We’re told not to discuss sex, politics and religion at the dinner table, but what happens to our free speech when we give in to that rule?

Sshh... No speaking out by Alison Flood: Historians Tom Holland, Mary Fulbrook, Serhii Plokhy and Daniel Beer discuss the people from the past who were guilty of complicity

Making foes out of friends by Steven Borowiec: North Korea’s grave human rights record is off the negotiation table in talks with South Korea. Why?

Nothing in life is free by Mark Frary: An investigation into how much information and privacy we are giving away on our phones

Not my turf by Jemimah Steinfeld: Helen Lewis argues that vitriol around the trans debate means only extreme voices are being heard

Stripsearch by Martin Rowson: You’ve just signed away your freedom to dream in private

Driven towards the exit by Victoria Pavlova: As Bulgarian media is bought up by those with ties to the government, journalists are being forced out of the industry

Shadowing the golden age of Soviet censorship by Ak Welsapar: The Turkmen author discusses those who got in bed with the old regime, and what’s happening now

Silent majority by Stefano Pozzebon: A culture of fear has taken over Venezuela, where people are facing prison for being critical

Academically challenged by Kaya Genç: A Turkish academic who worried about publicly criticising the government hit a tipping point once her name was faked on a petition

Unhealthy market by Charlotte Middlehurst: As coronavirus affects China’s economy, will a weaker market mean international companies have more power to stand up for freedom of expression?

When silence is not enough by Julian Baggini: The philosopher ponders the dilemma of when you have to speak out and when it is OK not to[/vc_column_text][/vc_column][/vc_row][vc_row][vc_column][vc_custom_heading text="In Focus"][vc_column_text]Generations apart by Kaya Genç and Karoline Kan: We sat down with Turkish and Chinese families to hear whether things really are that different between the generations when it comes to free speech

Crossing the line by Stephen Woodman: Cartels trading in cocaine are taking violent action to stop journalists reporting on them

A slap in the face by Alessio Perrone: Meet the Italian journalist who has had to fight over 126 lawsuits all aimed at silencing her

Con (census) by Jessica Ní Mhainín: Turns out national censuses are controversial, especially in the countries where information is most tightly controlled

The documentary Bolsonaro doesn't want made by Rachael Jolley: Brazil’s president has pulled the plug on funding for the TV series Transversais. Why? We speak to the director and publish extracts from its pitch

Queer erasure by Andy Lee Roth and April Anderson: Internet browsing can be biased against LGBTQ people, new exclusive research shows[/vc_column_text][/vc_column][/vc_row][vc_row][vc_column][vc_custom_heading text="Culture"][vc_column_text]Up in smoke by Félix Bruzzone: A semi-autobiographical story from the son of two of Argentina’s disappeared

Between the gavel and the anvil by Najwa Bin Shatwan: A new short story about a Libyan author who starts changing her story to please neighbours

We could all disappear by Neamat Imam: The Bangladesh novelist on why his next book is about a famous writer who disappeared in the 1970s[/vc_column_text][/vc_column][/vc_row][vc_row][vc_column][vc_custom_heading text="Index around the world"][vc_column_text]Demand points of view by Orna Herr: A new Index initiative has allowed people to debate about all of the issues we’re otherwise avoiding[/vc_column_text][/vc_column][/vc_row][vc_row][vc_column][vc_custom_heading text="Endnote"][vc_column_text]Ticking the boxes by Jemimah Steinfeld: Voter turnout has never felt more important and has led to many new organisations setting out to encourage this. But they face many obstacles[/vc_column_text][/vc_column][/vc_row][vc_row][vc_column width="1/3"][vc_custom_heading text="Subscribe"][vc_column_text]In print, online, in your mailbox, on your iPad.

Subscription options from £18 or just £1.49 in the App Store for a digital issue.

Every subscriber helps support Index on Censorship's projects around the world.

SUBSCRIBE NOW[/vc_column_text][/vc_column][vc_column width="1/3"][vc_custom_heading text="Read"][vc_column_text]The playwright Arthur Miller wrote an essay for Index in 1978 entitled The Sin of Power. We reproduce it for the first time on our website and theatre director Nicholas Hytner responds to it in the magazine

READ HERE[/vc_column_text][/vc_column][vc_column width="1/3"][vc_custom_heading text="Listen"][vc_column_text]In the Index on Censorship autumn 2019 podcast, we focus on how travel restrictions at borders are limiting the flow of free thought and ideas. Lewis Jennings and Sally Gimson talk to trans woman and activist Peppermint; San Diego photojournalist Ariana Drehsler and Index's South Korean correspondent Steven Borowiec

LISTEN HERE[/vc_column_text][/vc_column][/vc_row]

6 Jun 2019 | Campaigns -- Featured, Digital Freedom, Digital Freedom Statements, Statements

[vc_row][vc_column][vc_column_text]Social media platforms have enormous influence over what we see and how we see it.

We should all be concerned about the knee-jerk actions taken by the platforms to limit legal speech and approach with extreme caution any solutions that suggest it’s somehow easy to eliminate only “bad” speech.

Those supporting the removal of videos that "justify discrimination, segregation or exclusion based on qualities like age, gender, race, caste, religion, sexual orientation or veteran status" might want to pause to consider that it isn't just content about conspiracy theories or white supremacy that will be removed.

In the wake of YouTube's announcement on Wednesday 5 June, independent journalist Ford Fischer tweeted that some of his videos, which report on activism and extremism, had been flagged by the service for violations. Teacher Scott Allsopp had his channel featuring hundreds of historical clips deleted for breaching the rules that ban hate speech, though it was later restored with some videos still flagged.

It's not just Google's YouTube that has tripped over the inconsistent policing of speech online.

Twitter has removed tweets for violating its community standards, as in the case of US high school teacher and activist Carolyn Wysinger, whose post responding to actor Liam Neeson saying he’d roamed the streets hunting for black men to harm was deleted by the platform. “White men are so fragile,” the post read, “and the mere presence of a black person challenges every single thing in them.”

In the UK, gender critical feminists who have quoted academic research on sex and gender identity have had their Twitter accounts suspended for breaching the organisation’s hateful conduct policy, while threats of violence towards women often go unpunished.

Facebook, too, has suspended the pages of organisations that have posted about racist behaviours.

If we are to ensure that all our speech is protected, including speech that calls out others for engaging in hateful conduct, then social media companies' policies and procedures need to be clear, accountable and non-partisan. Any decisions to limit content should be taken by, and tested by, human beings. Algorithms simply cannot parse the context and nuance sufficiently to distinguish, say, racist speech from anti-racist speech.

We need to tread carefully. While an individual who incites violence towards others should not (and does not) enjoy the protection of the law, on any platform or in any kind of media, the problem of those who advocate hate cannot be solved by simply banning them.

In the drive to stem the tide of hateful speech online, we should not rush to welcome an ever-widening definition of speech to be banned by social media.

This means we – as users – might have to tolerate conspiracy theories, the offensive and the idiotic, as long as they do not incite violence. That doesn’t mean we can’t challenge them. And we should.

But the ability to express contrary points of view, to call out racism, to demand retraction and to highlight obvious hypocrisy depends on the ability to freely share information.[/vc_column_text][vc_basic_grid post_type="post" max_items="4" element_width="6" grid_id="vc_gid:1560160119940-326df768-f230-4" taxonomies="4883"][/vc_column][/vc_row]

10 May 2019 | Campaigns -- Featured

[vc_row][vc_column][vc_column_text]

Mark Zuckerberg at TechCrunch Disrupt 2012. Credit: JD Lasica

We, the undersigned, welcome the consultation on Facebook’s draft charter for the proposed oversight board. The individuals and organisations listed below agree that the following six comments highlight essential aspects of the design and implementation of the new board, and we urge Facebook to consider them fully during their deliberations.

The board should play a meaningful role in developing and modifying policies: The draft charter makes reference to the relationship between the board and Facebook when it comes to the company’s content moderation policies (i.e. that “Facebook takes responsibility for our (...) policies” and “is ultimately responsible for making decisions related to policy, operations and enforcement” but that (i) Facebook may also seek policy guidance from the board, (ii) the board’s decisions can be incorporated into Facebook’s policy development process, and (iii) the board’s decisions “could potentially set policy moving forward”). As an oversight board, and given that content moderation decisions are ultimately made on the basis of the policies which underpin them, it is critical that the board has a clear and meaningful role when it comes to developing and modifying those underlying Terms of Service/policies. For example, the board must be able to make recommendations to Facebook and be consulted on changes to key policies that significantly impact the moderation of user content. If Facebook wishes to decline to adopt the board’s recommendations, it should set out its reasoning in writing. Providing the board with such policy-setting authority would also help legitimize the board, and ensure it is not viewed as simply a mechanism for Facebook to shirk responsibility for making challenging content-related decisions.

To ensure independence, the board should establish its own rules of operation: Facebook’s final charter is unlikely to contain all of the details of the board’s internal procedural rules and working methods. In any event, it should be for the board itself to establish those rules and working methods, if it is to be sufficiently independent. Such rules and working methods might include how it will choose which cases to hear, how it will decide who will sit on panels, how it will make public information about the status of cases and proceedings, and how it will solicit and receive external evidence and expertise. The final charter should therefore set out that the board will be able to develop and amend its own internal procedural rules and working methods.

Independence of the board and its staff: The draft charter makes reference to a “full-time staff, which will serve the board and ensure that its decisions are implemented”. This staff will therefore have a potentially significant role, particularly if it is in any way involved in reviewing cases and liaising between the board and Facebook when it comes to implementation of decisions. The draft charter does not, however, set out much detail on the role and powers that this staff will have. The final charter should provide clarity on the role and powers of this staff, including how Facebook will structure the board to maintain the independence of the board and its staff.

Ability for journalists, advocates and interested citizens to raise issues of concern: At present, issues can only be raised to the board via Facebook’s own content decision-making processes and “Facebook users who disagree with a decision”. This suggests that only users who are appealing decisions related to their content can play this role. However, it is important that there also be a way for individuals (such as journalists, advocates and interested citizens) to be able to influence problematic policy and raise concerns directly to the board.

Ensuring diverse board representation: According to the Draft Charter, “the board will be made of experts with experience in content, privacy, free expression, human rights, journalism, civil rights, safety and other relevant disciplines” and “will be made up of a diverse set of up to 40 global experts”. While it is important for this board to reflect a diversity of disciplines, it is also integral that it reflects a diversity of global perspectives including different regional, linguistic and cultural perspectives from the various countries in which Facebook operates. The exact board composition will also be dependent upon the agreed scope of the board.

Promoting greater transparency around content regulation practices: Given that the board is a newfound mechanism for regulating content on Facebook and enforcing the company’s content policies, it should similarly seek to demonstrate transparency and be held accountable for its content-related practices. According to the Draft Charter, panel “decisions will be made public with all appropriate privacy protections for users” and “the board will have two weeks to issue an explanation for each decision.” In addition to providing transparency around individual board decisions, Facebook should issue a transparency report that provides granular and meaningful data including statistical data on the number of posts and accounts removed and impacted.

Organisational Signatories

AfroLeadership; Center for Democracy & Technology; Center for Studies on Freedom of Expression CELE; Centre for Communication Governance at National Law University Delhi; Committee to Protect Journalists; Derechos Digitales; Digital Empowerment Foundation; Fundación Karisma; Global Partners Digital; Index on Censorship; International Media Support; Internet Sans Frontières; Internews; IPANDETEC; New America’s Open Technology Institute; Paradigm Initiative; PEN America; R3D: Red en Defensa de los Derechos Digitales; Ranking Digital Rights; SMEX; Software Freedom Law Center, India; Trillium Asset Management, LLC

Individual Signatories

Jessica Fjeld; Meg Roggensack; Molly Land

Notes to editors

For further information, please contact Charles Bradley, Executive Director at Global Partners Digital ([email protected]). [/vc_column_text][/vc_column][/vc_row][vc_row][vc_column][vc_basic_grid post_type="post" max_items="4" element_width="6" grid_id="vc_gid:1557483094212-3285f4a4-f83a-5" taxonomies="136"][/vc_column][/vc_row]
